The gender of Japanese doctors

In my ongoing but largely unsuccessful attempt to teach myself Japanese, I often have occasion to use Google Translate to translate sections of Japanese (children’s) books into English and to check my own attempts at writing. This is how I discovered that it will often insert gendered pronouns based on gender stereotypes. This is not a new problem for Google Translate - the issue was raised over 5 years ago and has since been tackled for a select number of languages (to and from English…) [1]. It is a shame that in those 5 years, the gender-specific translations have not been rolled out to other languages.

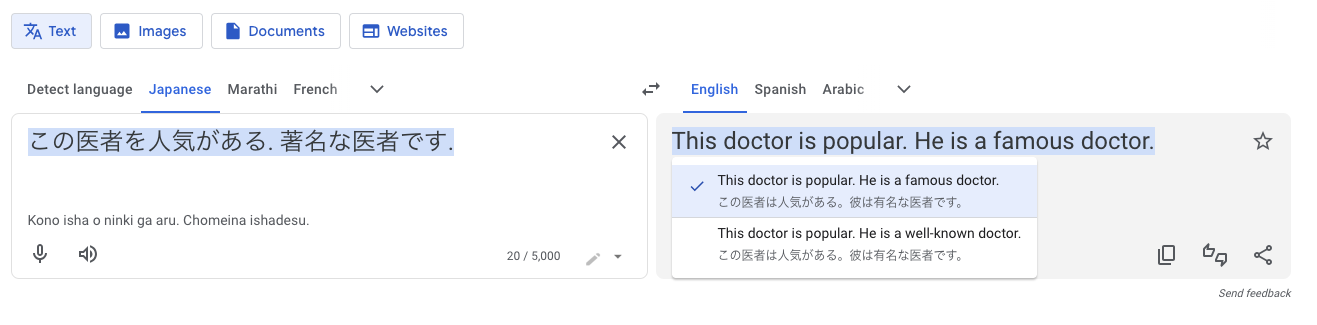

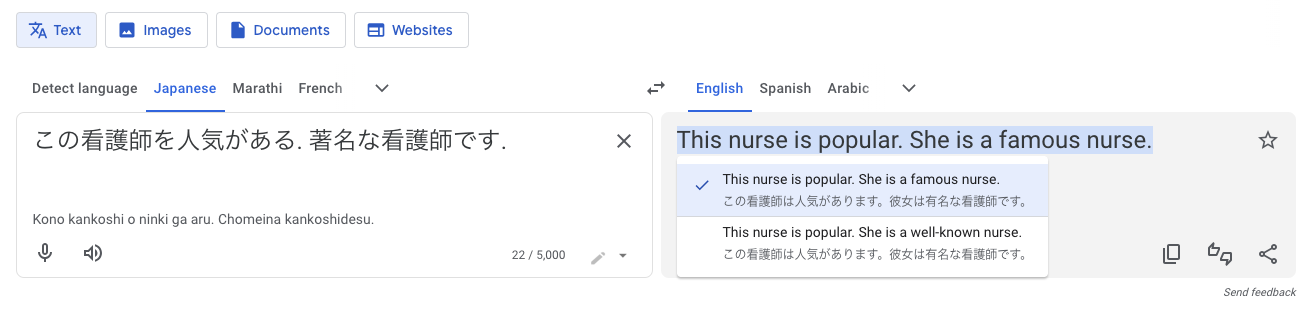

For example, if I want to translate a sentence about a popular doctor, I am served only translations with “he” pronouns, but if I want to translate a sentence about a popular nurse… you guessed it. She.

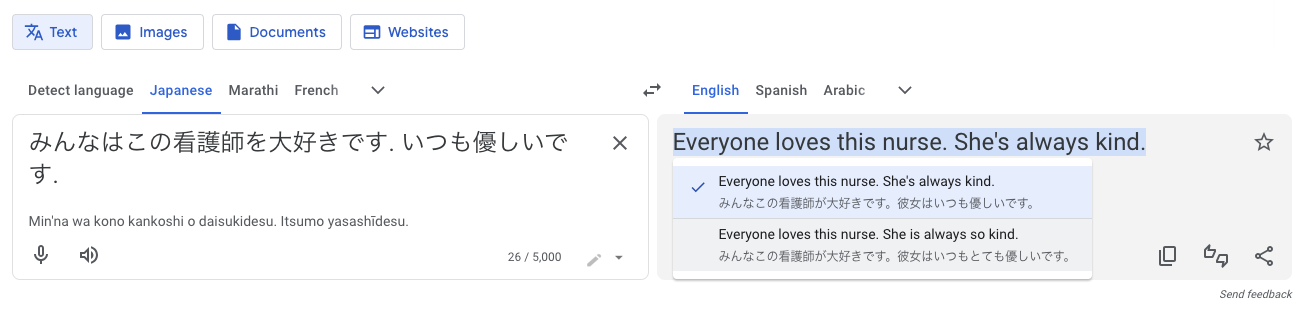

There were instances where both translations were offered, such as:

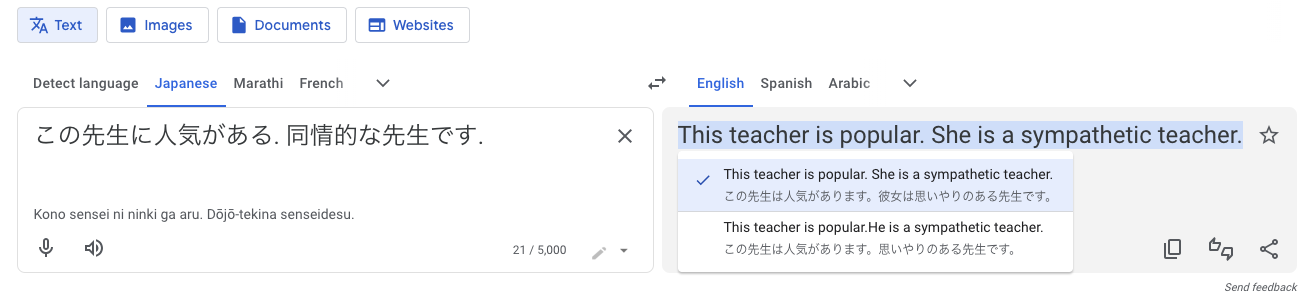

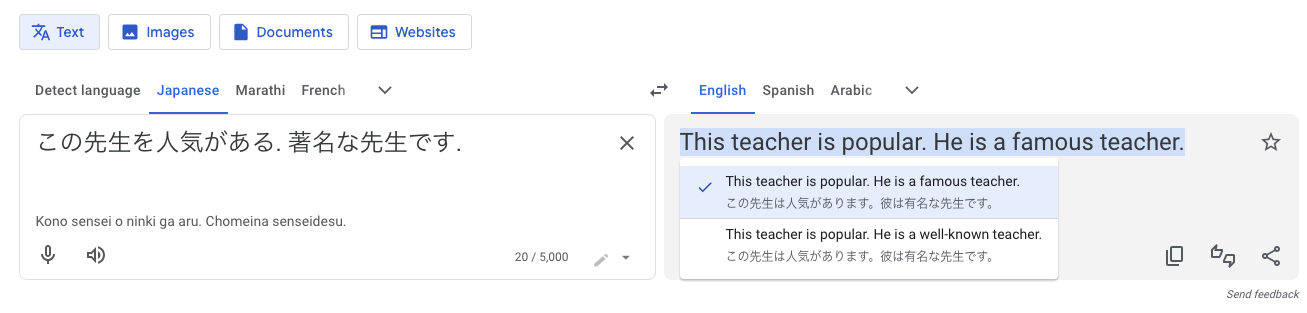

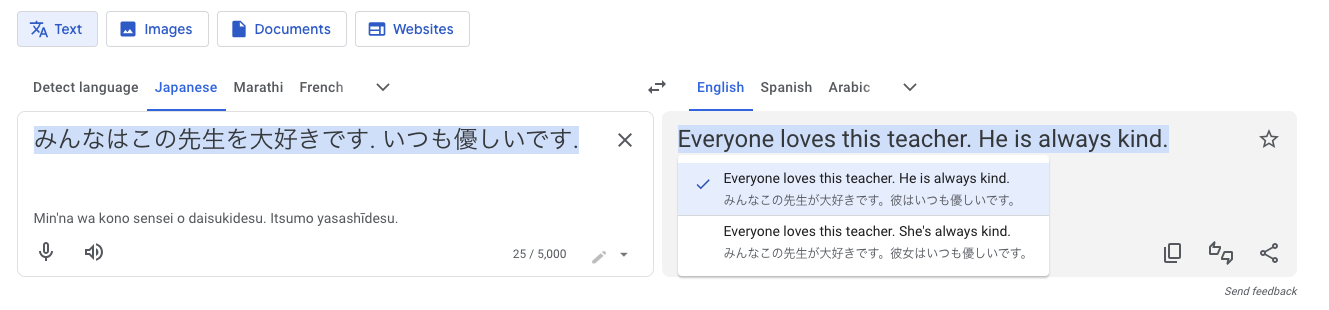

However, fame seems to be gendered for teachers, as in:

The selection of the pronoun appears to be conditioned on the content of the previous sentence, as in these contrasting examples (“this nurse” leads to “she” in the following sentence, but with “this teacher” multiple pronouns are offered).

Being offered multiple gendered pronouns seems to happen most consistently for “teacher” - perhaps reflecting more balanced training data (around half of teachers in Japan are female [2], whereas women massively dominate nursing [3] and men dominate the medical profession [4]). Whatever the reason, it is clear that Google needs to handle translation more systematically when going from languages without gendered pronouns (such as Turkish) and pronoun-drop languages (such as Japanese, or Chinese, which I found had similar issues) into languages which require a gendered pronoun, such as English (or French, Arabic, Russian…). A best estimate based on (slightly messy) WALS data suggests around half the world’s languages have gendered pronouns and half don’t [5]. Work is needed to ensure gender biases don’t taint the accuracy of translations: 頑張ってよ。

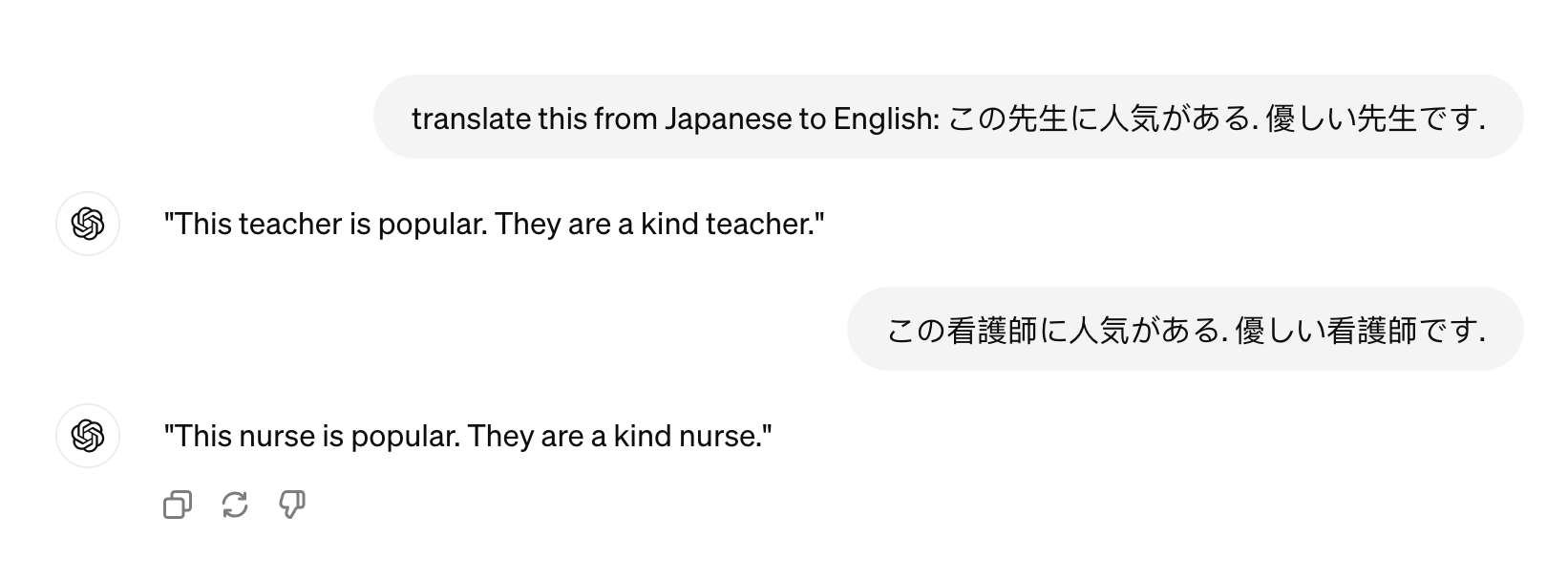

For comparison, ChatGPT translates sentences without a gendered pronoun in Japanese to “they” as in the following example:

I suspect this is the result of targeted alignment, because the amount of online training data in which “they” refers to a specific person must be quite limited. Whilst defaulting to “they” does have benefits (it does not reinforce gender stereotypes), it can still result in misgendering (some people strictly prefer gendered pronouns). A more promising solution would be to offer multiple reasonable translations (as Google has done for select languages) and, ideally, to allow users to configure pronoun translation preferences (see [6] for a survey of community preferences).

P.S. As a far-from-native speaker of Japanese, my example sentences may have introduced a confound of “unnaturalness”. However, I believe that as long as a system is willing to offer a translation of a sentence, it should offer pronoun options.

1. https://www.theverge.com/2018/12/6/18129203/google-translate-gender-specific-translations-languages
2. https://www.europarl.europa.eu/RegData/etudes/ATAG/2020/646191/EPRS_ATA(2020)646191_EN.pdf
3. https://www.sciencedirect.com/science/article/pii/S1976131723000506#bib12
4. https://www.nippon.com/en/japan-data/h01978/
5. https://wals.info/feature/44A#5/2.608/16.66
6. https://aclanthology.org/2023.acl-long.23.pdf