TL;DR Bias Mitigation Based on SCM

This blog post is intended as a summary of my bias mitigation paper for the general reader, with an indication of some of my motivations for conducting this work.

My paper on queerphobia in sentiment analysis highlighted to me that debiasing techniques that rely on a pre-defined list of identity terms often results in some of the most marginalised groups continuing to be harmed. As such I wanted to develop a technique for debiasing models that did not rely on predefined lists of identities or associated stereotypes (i.e. the association between being a lesbian and being butch, which is not a consistent stereotype about marginalised identities). To achieve the latter goal (debiasing without a list of identity specific stereotypes) I turned to the stereotype content model from psychology, a popular theory that argues that all stereotypes can be broken down into two main “axes” - how warm versus cold we think the group is (i.e. welcoming vs distant), and how competent versus incompetent we think the group is. A group’s “position” with regards to these two axes will then predict how we treat them, with the “cold + incompetent” groups being treated with disgust (a typical example of such a group being the unhoused population in developed countries).

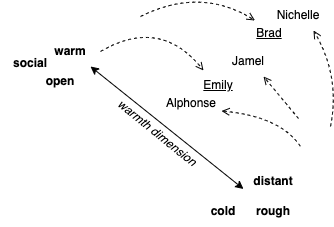

I combined this theory with a method for measuring how similar the mathematical representation (used by a language (word prediction) model) for a set of words is, and was able to show that a very popular language model associated white American names with positive warmth + competence terms, and black American names with cold + incompetence terms. The same was true for white American male names and Mexican American female names respectively. I then used a technique for adjusting the mathematical representation of the words to disassociate these names from the concepts of warmth and competence, by making them equally similar to the positive and negative “poles” of each concept (I visualise this below). When I tested a new set of names, their mathematical representations had also shifted, showing that this technique had gone some way to improving how equitably these demographic groups are represented in the model.

What I proposed was just a prototype, and needs to be tested on a larger range of identities, but by relying on an understanding of stereotyping that is not specific to any particular group, the method should be easy to extend. I had originally planned to focus on a technique for automatically generating identity terms to then “debias” the representation of, but I have begun to question the effectiveness of debiasing language models without regard to a particular use context. More on that soon…