Do better, now

In response to a new reading group run by the Edinburgh Effective Altruism society, advertising a course on safe AGI development (from OpenAI, no less), I shared some thoughts on how to create more ethical, responsible technology now. I reproduce them below:

I support work to tackle the risks of AI technologies, but as someone researching language technology harms, I want to offer some additional, practical steps to mitigate the harms of AI technologies as they exist right now, which you can implement in your current studies or future work.

You can:

- Document the data you compile using this framework, which encourages you to consider the affected subgroups, risks to privacy, and how annotation workers were compensated

- Generate model cards for your models to document intended use, define misuse, identify risks, etc. (here is a good example for the open science model BLOOM)

- Analogously, read the documentation for data sets and models you use - are they suitable for this new task? Are you falling into the trap of assuming portability?

- Ask yourself: who is not included in the data, who isn't represented, which harms are not being discussed? Often these will involve the most marginalised people - how can your work address these issues?

- Pay your annotators a fair price and also consider whether your task may harm them in other ways, e.g. through repeated exposure to abusive content (are you reliant on digital sweatshops in traditionally pillaged countries in the Global South?)

- Avoid using LLMs in any scenario where truth is important, e.g. literature reviews
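
To make the model card step a little more concrete, here is a minimal sketch in Python of how you might keep a card alongside your code. The `ModelCard` class, its field names and the example values are all hypothetical and chosen only for illustration; this is not a standard schema, nor the format used by any published card, so adapt the sections to whatever your model actually needs documented.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Hypothetical minimal model card; the fields are illustrative, not a standard."""
    model_name: str
    intended_use: str
    misuse: str
    risks: List[str] = field(default_factory=list)
    data_notes: str = ""

    def to_markdown(self) -> str:
        """Render the card as a small markdown document."""
        lines = [
            f"# Model card: {self.model_name}",
            "",
            "## Intended use",
            self.intended_use,
            "",
            "## Misuse and out-of-scope use",
            self.misuse,
            "",
            "## Known risks",
        ]
        # Make a gap in the documentation visible rather than silently empty.
        lines += [f"- {risk}" for risk in self.risks] or ["- None documented yet"]
        if self.data_notes:
            lines += ["", "## Training data", self.data_notes]
        return "\n".join(lines) + "\n"


# Made-up example values, purely for illustration.
card = ModelCard(
    model_name="toy-sentiment-classifier",
    intended_use="Coursework experiments on English product reviews.",
    misuse="Not for decisions about individual people.",
    risks=["Untested on dialects absent from the training data"],
    data_notes="Annotators, subgroups covered and pay should be described here.",
)

if __name__ == "__main__":
    print(card.to_markdown())
```

Keeping the card as a file next to the model means it gets updated when the model does, and an empty "Known risks" section becomes an obvious prompt to go and look for some.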

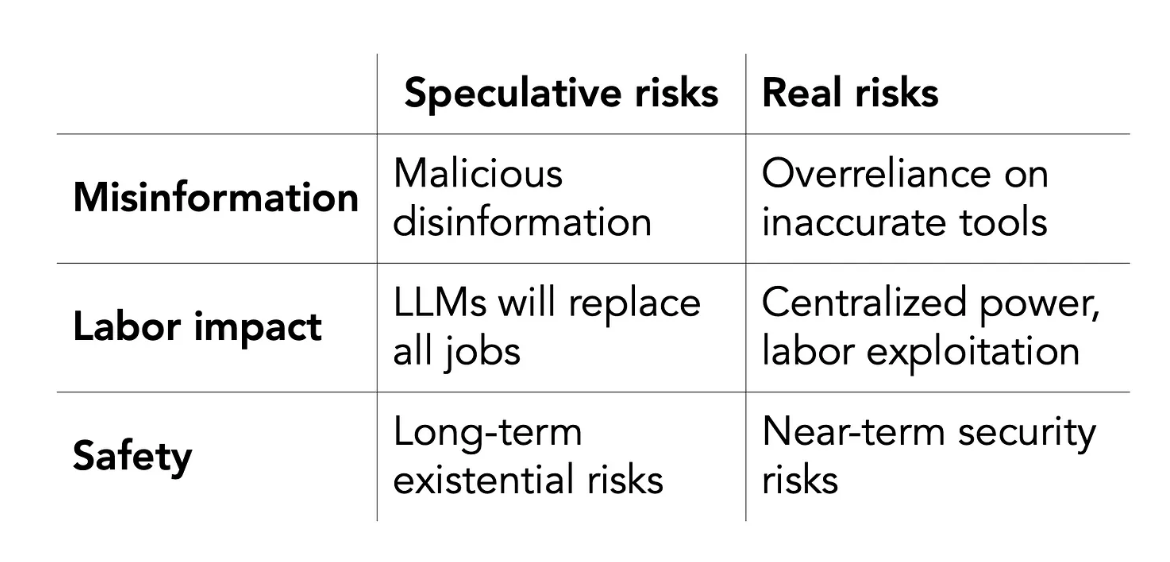

Also, I would recommend reading this letter from the DAIR Institute, this article entitled "Afraid of AI? The startups selling it want you to be", and this Substack (from which the below is taken) for an alternative take on "the letter".