The Censorship of Art

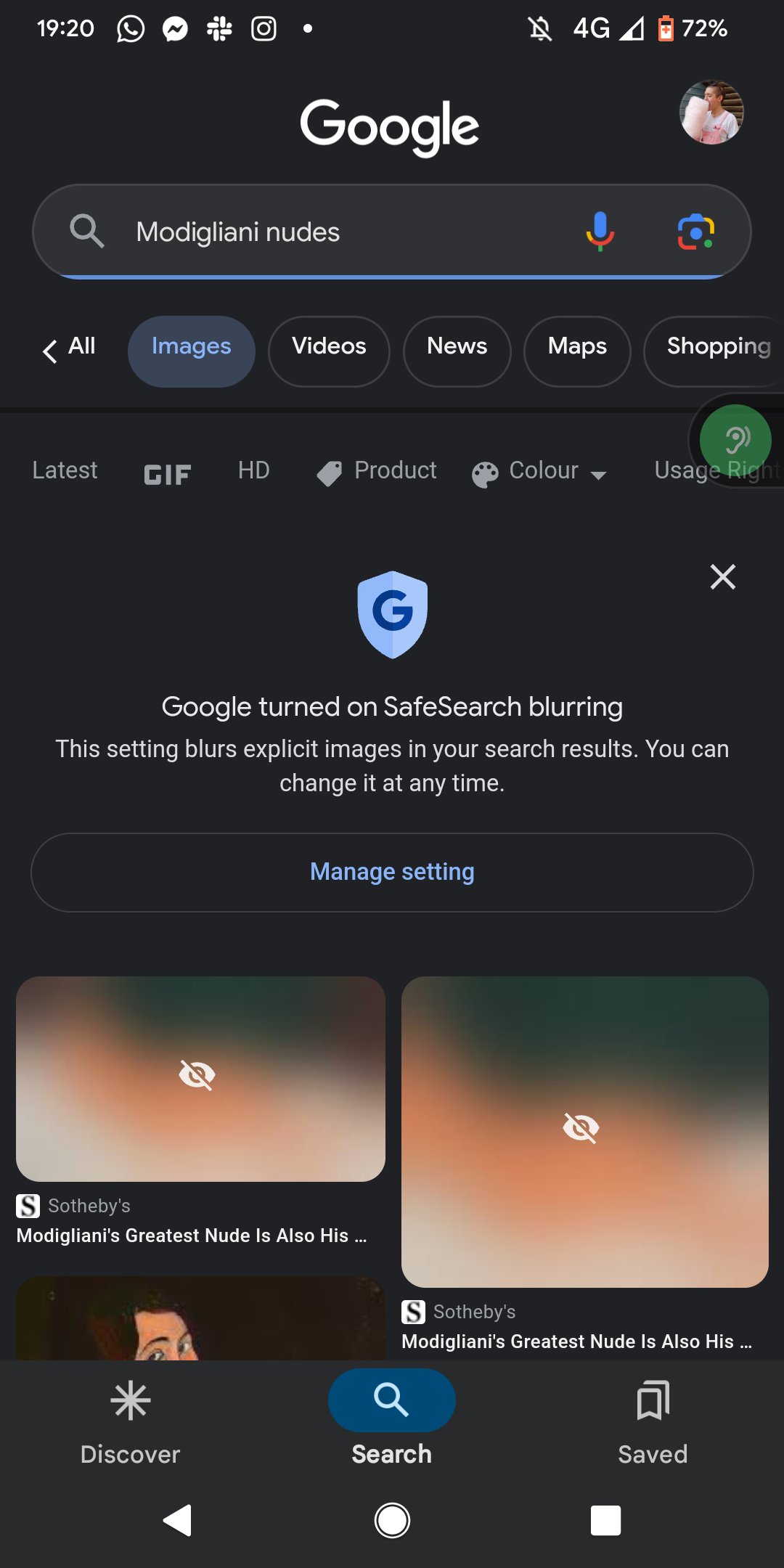

I was very surprised to discover the other day, whilst searching for an example of work by one of my favourite artists, that SafeSearch with blur had been enabled on my Pixel device. Some of Modigliani’s most famous figures were returned to me as a series of beige blurs. A quick search informs me that Google has enabled blur as a new default “to ensure that children and teens don’t inadvertently view explicit content”1. I would argue that a system which cannot distinguish between an oil painting by a modernist artist and pornography needs a lot more training… but it did give me a little opportunity for some experimentation.

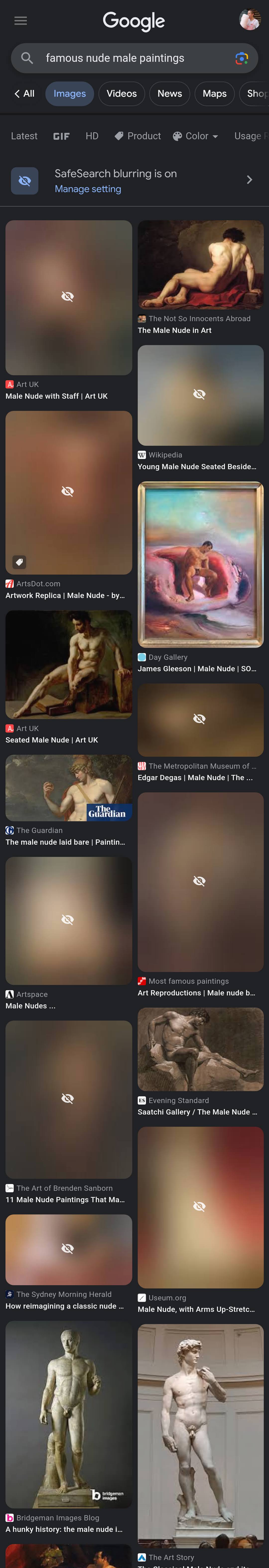

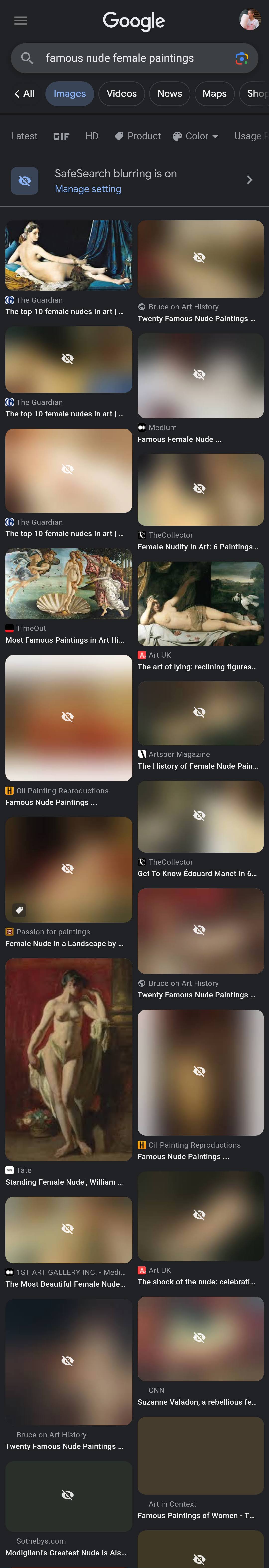

I was interested to see if male nudes were less censored than female nudes, and can provide some very crude anecdotal evidence. I found that “famous nude male paintings” returned about 9 of 16 images with a blur, whereas for “famous nude female paintings” 17 of the 21 top returned images were censored. Further experimentation is clearly required, but it would not surprise me if the algorithm Google is using to detect nudity is deeply, deeply sexist, as is so often the case2.
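
For anyone who wants to repeat this crude tally with their own searches, here is a minimal Python sketch that turns the counts quoted above into blur rates and runs a two-sided Fisher's exact test on them. The function names are hypothetical, and Fisher's test is simply one reasonable choice for counts this small; it was not part of the original experiment.

```python
from math import comb


def blur_rate(blurred, total):
    """Fraction of returned images that were blurred."""
    return blurred / total


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are the two searches; columns are blurred / not blurred.
    Returns the probability of a table at least as unlikely as the one
    observed, assuming blurring is unrelated to the search term.
    """
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2
    denom = comb(n, row1)

    def prob(k):
        # Hypergeometric probability of k blurred images in the first row.
        return comb(col1, k) * comb(n - col1, row1 - k) / denom

    lowest = max(0, row1 - (n - col1))
    highest = min(row1, col1)
    p_observed = prob(a)
    # Small tolerance so that ties with the observed table are included.
    return sum(
        prob(k)
        for k in range(lowest, highest + 1)
        if prob(k) <= p_observed * (1 + 1e-9)
    )


# Counts taken from the paragraph above (the male count is approximate).
male_blurred, male_total = 9, 16
female_blurred, female_total = 17, 21

male_rate = blur_rate(male_blurred, male_total)
female_rate = blur_rate(female_blurred, female_total)
p_value = fisher_exact_two_sided(
    male_blurred, male_total - male_blurred,
    female_blurred, female_total - female_blurred,
)

print(f"male nudes blurred:   {male_rate:.1%}")
print(f"female nudes blurred: {female_rate:.1%}")
print(f"Fisher exact p-value: {p_value:.3f}")
```

Whatever the test reports, a few dozen images from a single device can only hint at a pattern; a fair comparison would need many more queries, devices and accounts.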

An art historian or a psychologist might have something interesting to say about the fact that many of the “famous nude female paintings” were in landscape orientation, and thus more could fit on my phone screen at one time - I guess a naked man lying down would be a little too homoerotic? Also, the famous blurs are all distinctly at the “light” rather than “deep” end of the Fenty foundation spectrum, but racism in the art world is sadly hardly surprising.

In addition to the blur functionality, I also wonder if Google down-ranks potentially nude images, because I was quite surprised to find only a cropped (and thus nipple-free) version of one of Modigliani’s famous nudes amongst the first 20 images returned in the search for “Modigliani famous artwork”. Even “Modigliani reclining figure” returned a surprising number of irrelevant results (non-reclining figures) and pencil sketches - and a surprising lack of his famous warm-toned nudes.

The censorship of art is a serious issue. It stifles creativity and creates unnecessary taboos around nudity. Modigliani’s original exhibition of nudes was censored by police - over 100 years ago. Now Google Images’ SafeSearch is acting as a form of cultural police. Protecting young people on the internet means teaching them about how to keep safe online, and whilst safety filters of this sort may have a role to play in that, we should not at the same time be teaching them that nudity is inherently sexual and something to hide. Have we not moved on in 100+ years?

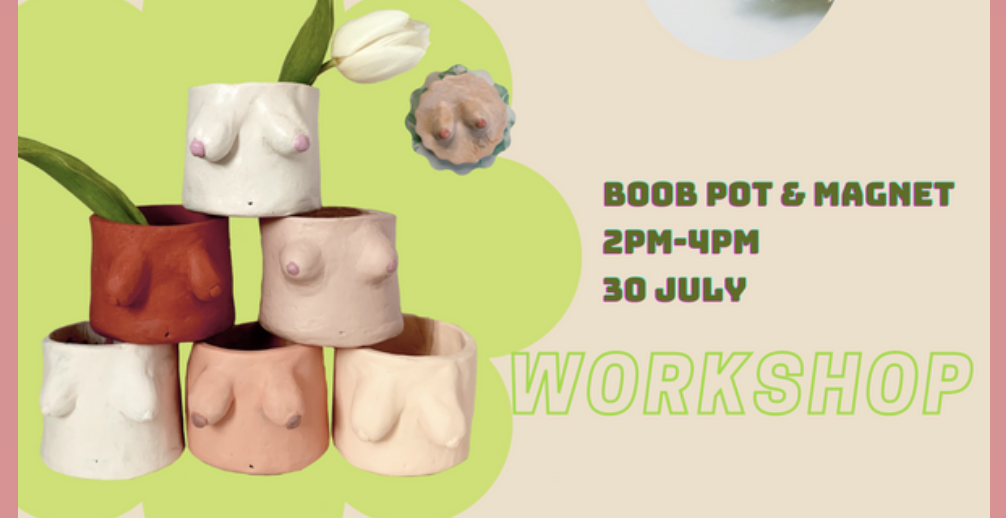

As an aside, my department had its only tiny scandal last year when someone dared to advertise a ceramic nude pot (“boob pot”) making event on the general interest mailing list and had to apologise for causing offence. I find the idea of being offended by a crude ceramic rendering of a breast offensive in and of itself, but I saved the community from a reply-all war by writing this blog post instead.

I leave you with the following GIF that helps to visualise issues with current nudity filters, taken from an excellent article on bias in algorithms designed to detect explicit content2: